Labeling for Bee Detection

Labeling video at 60 frames per second with hundreds of bees in each frame presents unique challenges. Tracking bees' motion will require at least 60 frames a second, as they move quite fast. Most motion detection algorithms rely on frame-by-frame proximity. Most algorithms calculate the Euclidean distance and pick the closest object. Various search algorithms for selecting the closest object can improve performance. One such approach is described in a paper by L. Lacassagne. The higher framerate video is required to preserve the proximity between frames so a vector can be calculated for each bee.

Bees are small and there are often hundreds of them in each frame. Labeling a single frame precisely is a tedious process and can take several minutes. This means that labeling even a few seconds of video could take hours with common labeling software. Introducing the “Label Loop:”

The Label Loop

My so-called label loop is a form of semi-supervised learning. The idea is that you use a small set of data and label and train against this using some sort of transfer learning getting as close as possible to the actual data set. I will start with s short clip and grab about 10 frames. This gives me an initial model that will get close. Lower accuracy and probably lower precision as well. My initial model with 10 frames of data against the SSD ResNet50 model with Google’s “few-shot training detector” notebook. Although I found the code in this notebook to be fairly complicated for what it does, it is basically not restoring parts classifier from a checkpoint and then training only the classifier using a small sample of data. This is basically transfer learning, which could be classified as a form of few-shot learning.

I used this notebook as a starting place because I started with only 10 examples. Training a full model on 10 images doesn’t really produce great results. The checkpoint of SSD ResNet50 against the COCO dataset for fine tuning is close, but not really. Bees are small insects and most of the data in Google’s pre-trained model zoo are based on coco. These images have a few large objects that are trained against.

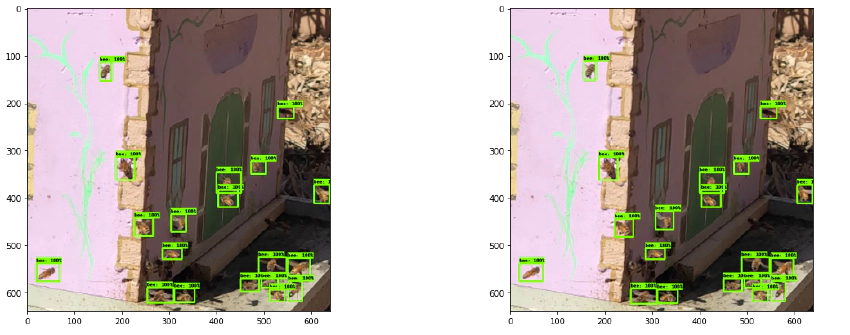

This initial model has an accuracy of about 80%. I am using this to generate labels for the next 100 images or so. After cleaning these labels up, I can train a better model. This can be used to generate labels for the next 1000 images. I then generate labels and train against the whole dataset. These labels are likely not accurate, and the recall is low. Thankfully, using an iterative approach like this is forgiving, and tends to “self-correct” over the iterations. Eventually, the missing bees are found and the recall comes up.

At this point, I can start the process of curating the dataset for proper distribution, etc. Given this is video, It is likely I don’t need to train against every frame as we are using image base frame-by-frame detection at this point. I hope to have a dataset that is several terabytes of curated data based on video from the front of my hives.

This has been a manual process and has taken a bit more effort than I anticipated. The traditional labeling tools just are not suited for labeling bees! This also highlights some of the issues with using machine learning to solve seemingly mundane problems.

My focus will be on a fast model that works in an edge scenario. The speed is more important than a model with a high score, or high recall. The idea is that it should be used as a detector on the front of a hive to gather data about the hive’s health. The detector only needs to actuate enough to generate meaningful data on the hive.